
- Source files: spectrum_simulation.ipynb | spectrum_simulation.py

Spectrum simulation for CTA

A quick example of how to use the functions and classes in gammapy.spectrum to simulate and fit spectra.

We will simulate observations for the Cherenkov Telescope Array (CTA), first using a power law model without any background. Then we will add a background component extracted from the IRF. The last part of the tutorial shows how to define a user-defined spectral model, which can then be used for simulation and fitting.

We will use the following classes and functions:

- gammapy.spectrum.SpectrumDatasetOnOff

- gammapy.spectrum.SpectrumDataset

- gammapy.irf.load_cta_irfs

- gammapy.modeling.models.PowerLawSpectralModel

Setup

Same procedure as in every script …

[1]:

%matplotlib inline
import matplotlib.pyplot as plt

[2]:

import numpy as np
import astropy.units as u
from gammapy.spectrum import (
    SpectrumDatasetOnOff,
    CountsSpectrum,
    SpectrumDataset,
)
from gammapy.modeling import Fit, Parameter
from gammapy.modeling.models import PowerLawSpectralModel, SpectralModel
from gammapy.irf import load_cta_irfs

Simulation of a single spectrum

To do a simulation, we need to define the observational parameters (the livetime, the offset, the assumed integration radius and the energy range of the simulation) and choose a spectral model. The model is then convolved with the IRFs and Poisson-fluctuated to obtain the simulated counts for each observation.
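The simulation chain described above can be sketched in plain NumPy. This is a toy illustration, not gammapy's internal implementation. It makes three assumptions: a made-up flat effective area of 10^5 m², a perfect (identity) energy dispersion, and the power law integrated analytically over each true-energy bin. The expected counts are the bin flux multiplied by effective area and livetime. They are then folded through the dispersion matrix and Poisson-fluctuated.

```python
import numpy as np

# Toy forward-folding chain: flux -> expected counts -> Poisson counts.
# The IRF values are made up for illustration and are NOT CTA IRFs.
edges = np.logspace(-1, 2, 31)            # energy bin edges in TeV (30 bins)
e_lo, e_hi = edges[:-1], edges[1:]

amplitude = 2.5e-12                       # cm-2 s-1 TeV-1
index = 2.1
reference = 1.0                           # TeV


def integral_flux(e1, e2):
    """Analytic integral of the power law between e1 and e2, in cm-2 s-1 (index != 1)."""
    g = 1.0 - index
    return amplitude * reference / g * ((e2 / reference) ** g - (e1 / reference) ** g)


aeff = np.full(len(e_lo), 1e9)            # hypothetical flat effective area in cm2 (1e5 m2)
livetime = 3600.0                         # 1 h in s

# Expected counts per true-energy bin
npred_true = integral_flux(e_lo, e_hi) * aeff * livetime

# Hypothetical perfect energy resolution: identity migration matrix
# (rows: true energy, columns: reconstructed energy)
edisp = np.eye(len(e_lo))
npred_reco = npred_true @ edisp

# Poisson fluctuation gives one simulated counts spectrum
rng = np.random.default_rng(42)
counts = rng.poisson(npred_reco)
```

With a realistic IRF, the effective area varies with energy and the dispersion matrix spreads each true-energy bin over several reconstructed-energy bins. The structure of the calculation stays the same.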

[3]:

# Define simulation parameters
livetime = 1 * u.h
offset = 0.5 * u.deg
integration_radius = 0.1 * u.deg
# Energy from 0.1 to 100 TeV with 10 bins/decade
energy = np.logspace(-1, 2, 31) * u.TeV
# Solid angle of the circular ON region with radius integration_radius
solid_angle = 2 * np.pi * (1 - np.cos(integration_radius)) * u.sr
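Here is a quick check of the numbers in the cell above. The 31 logarithmically spaced edges give 30 bins over three decades. The solid angle of the 0.1 deg ON region follows from the cone formula 2π(1 - cos θ), which for small angles reduces to πθ². The NumPy-only sketch below drops the astropy units and works in radians:

```python
import numpy as np

# 31 log-spaced edges from 10^-1 to 10^2 TeV -> 30 bins over 3 decades
edges = np.logspace(-1, 2, 31)
n_bins = len(edges) - 1
bins_per_decade = n_bins / np.log10(edges[-1] / edges[0])
ratio = edges[1:] / edges[:-1]            # constant ratio 10**0.1 between neighbouring edges

# Solid angle of a cone with half-opening angle theta: 2*pi*(1 - cos(theta))
theta = np.deg2rad(0.1)
solid_angle = 2 * np.pi * (1 - np.cos(theta))   # ≈ 9.57e-06 sr
# Small-angle approximation: pi * theta**2
solid_angle_approx = np.pi * theta ** 2
```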

[4]:

# Define spectral model - a simple power law in this case
model_ref = PowerLawSpectralModel(
    index=3.0,
    amplitude=2.5e-12 * u.Unit("cm-2 s-1 TeV-1"),
    reference=1 * u.TeV,
)
print(model_ref)

PowerLawSpectralModel

Parameters:

name      value     error unit           min max frozen
--------- --------- ----- -------------- --- --- ------
index     3.000e+00 nan                  nan nan False
amplitude 2.500e-12 nan   cm-2 s-1 TeV-1 nan nan False
reference 1.000e+00 nan   TeV            nan nan True
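PowerLawSpectralModel describes the differential flux dN/dE = amplitude × (E / reference)^(-index). The sketch below writes this formula, and its analytic integral between two energies, as plain NumPy functions. These are hypothetical helpers, not gammapy API. The sketch cross-checks the integral numerically for the parameters printed above, with energies in TeV and the amplitude in cm-2 s-1 TeV-1:

```python
import numpy as np


def power_law(energy, index, amplitude, reference):
    """Differential flux dN/dE = amplitude * (energy / reference) ** -index."""
    return amplitude * (energy / reference) ** -index


def power_law_integral(e_min, e_max, index, amplitude, reference):
    """Analytic integral of the power law between e_min and e_max (index != 1)."""
    g = 1.0 - index
    return amplitude * reference / g * ((e_max / reference) ** g - (e_min / reference) ** g)


params = dict(index=3.0, amplitude=2.5e-12, reference=1.0)

flux_1tev = power_law(1.0, **params)      # equals the amplitude at the reference energy
flux_10tev = power_law(10.0, **params)    # 2.5e-12 * 10**-3 = 2.5e-15

# Cross-check the analytic integral with a trapezoidal sum on a fine log grid
e = np.logspace(-1, 2, 20001)
y = power_law(e, **params)
numeric = np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(e))
analytic = power_law_integral(0.1, 100.0, **params)
```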

Get and set the model parameters after initialising

The model parameters are stored in the Parameters object of the spectral model. Each model parameter is a Parameter instance with a value and a unit attribute, as well as a quantity property for convenience.

[5]:

print(model_ref.parameters)

Parameters
    index               : 3.000
    amplitude           : 2.50e-12  1 / (cm2 s TeV)
    reference (frozen)  : 1.000  TeV

[6]:

print(model_ref.parameters["index"])
model_ref.parameters["index"].value = 2.1
print(model_ref.parameters["index"])

Parameter(name='index', value=3.0, factor=3.0, scale=1.0, unit=Unit(dimensionless), min=nan, max=nan, frozen=False)
Parameter(name='index', value=2.1, factor=2.1, scale=1.0, unit=Unit(dimensionless), min=nan, max=nan, frozen=False)
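The printed repr shows that a parameter carries a factor and a scale, and appears to store value = factor × scale. Presumably this lets an optimizer work with numbers of order unity, for example factor 2.5 and scale 1e-12 for an amplitude. The hypothetical ToyParameter class below mimics only that relationship. It is not gammapy's Parameter implementation, and its quantity property returns a plain (value, unit) tuple instead of an astropy Quantity.

```python
from dataclasses import dataclass


@dataclass
class ToyParameter:
    """Hypothetical stand-in for a model parameter; NOT gammapy's Parameter class."""
    name: str
    factor: float
    scale: float = 1.0
    unit: str = ""
    frozen: bool = False

    @property
    def value(self):
        # The physical value is the product of the internal factor and scale
        return self.factor * self.scale

    @value.setter
    def value(self, val):
        # Setting the value keeps the scale fixed and updates the factor
        self.factor = val / self.scale

    @property
    def quantity(self):
        # Simplified: a (value, unit) tuple instead of an astropy Quantity
        return self.value, self.unit


index = ToyParameter("index", factor=3.0)
index.value = 2.1                          # factor becomes 2.1, scale stays 1.0
amplitude = ToyParameter("amplitude", factor=2.5, scale=1e-12, unit="cm-2 s-1 TeV-1")
```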

[7]:

# Load IRFs
filename = (
    "$GAMMAPY_DATA/cta-1dc/caldb/data/cta/1dc/bcf/South_z20_50h/irf_file.fits"
)
cta_irf = load_cta_irfs(filename)

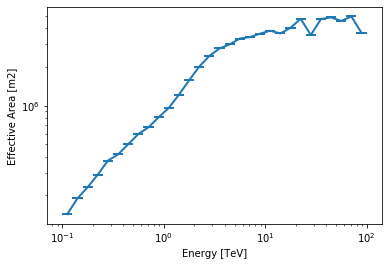

A quick look at the effective area and energy dispersion:

[8]:

aeff = cta_irf["aeff"].to_effective_area_table(offset=offset, energy=energy)
aeff.plot()
plt.loglog()
print(cta_irf["aeff"].data)

NDDataArray summary info
MapAxis
    name      : energy
    unit      : 'TeV'
    nbins     : 42
    node type : edges
    edges min : 1.3e-02 TeV
    edges max : 2.0e+02 TeV
    interp    : log
MapAxis
    name      : offset
    unit      : 'deg'
    nbins     : 6
    node type : edges
    edges min : 0.0e+00 deg
    edges max : 6.0e+00 deg
    interp    : lin
Data : size = 252, min = 0.000 m2, max = 5371581.000 m2

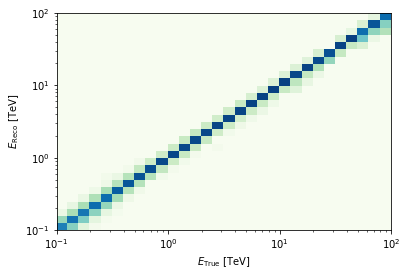

[9]:

edisp = cta_irf["edisp"].to_energy_dispersion(
    offset=offset, e_true=energy, e_reco=energy
)
edisp.plot_matrix()
print(edisp.data)

/Users/adonath/github/adonath/gammapy/gammapy/utils/interpolation.py:159: Warning: Interpolated values reached float32 precision limit

"Interpolated values reached float32 precision limit", Warning

NDDataArray summary info
MapAxis
    name      : e_true
    unit      : 'TeV'
    nbins     : 30
    node type : edges
    edges min : 1.0e-01 TeV
    edges max : 1.0e+02 TeV
    interp    : log
MapAxis
    name      : e_reco
    unit      : 'TeV'
    nbins     : 30
    node type : edges
    edges min : 1.0e-01 TeV
    edges max : 1.0e+02 TeV
    interp    : log
Data : size = 900, min = 0.000, max = 0.926
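In the energy dispersion matrix, each row corresponds to one true-energy bin. Its entries give the probability of reconstructing the event in each reconstructed-energy bin, so predicted counts are folded with a matrix product. The toy matrix below assumes a Gaussian migration in log10(E) with a constant resolution of 0.05, which is a made-up value. The real CTA matrix plotted above varies with energy and offset.

```python
import numpy as np

# Hypothetical energy dispersion: Gaussian in log10(E) with a constant resolution.
# The real CTA matrix depends on energy and offset; this is only a toy.
edges = np.logspace(-1, 2, 31)                    # same binning for true and reco energy
log_center = 0.5 * (np.log10(edges[:-1]) + np.log10(edges[1:]))
sigma = 0.05                                      # assumed resolution in log10(E)

# diff[i, j] = log10(E_reco_j) - log10(E_true_i)
diff = log_center[None, :] - log_center[:, None]
density = np.exp(-0.5 * (diff / sigma) ** 2)

# Normalise each row (true energy) to 1, i.e. assume every event is reconstructed
# inside the energy range. Real matrices can lose probability at the edges.
matrix = density / density.sum(axis=1, keepdims=True)

# Fold predicted counts from true into reconstructed energy
npred_true = np.full(30, 10.0)
npred_reco = npred_true @ matrix
```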

[10]:

dataset = SpectrumDataset(
    aeff=aeff, edisp=edisp, model=model_ref, livetime=livetime, name="obs-0"
)
dataset.fake(random_state=42)

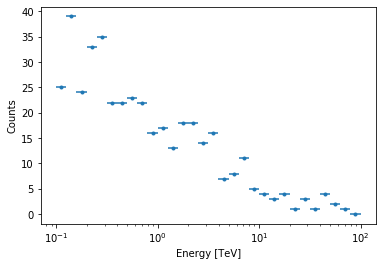

[11]:

# Take a quick look at the simulated counts
dataset.counts.plot()

[11]:

<matplotlib.axes._subplots.AxesSubplot at 0x1245339e8>

Include Background

In this section we include a background component extracted from the IRF. We also simulate more than one observation and fit each one individually to obtain average fit results.

[12]:

# Background counts in the ON region: integrate the IRF background rate over
# each energy bin, then multiply by the region solid angle and the livetime
bkg_data = (
    cta_irf["bkg"].evaluate_integrate(
        fov_lon=0 * u.deg, fov_lat=offset, energy_reco=energy
    )
    * solid_angle
    * livetime
)
bkg = CountsSpectrum(
    energy[:-1], energy[1:], data=bkg_data.to_value(""), unit=""
)
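The background cell above turns a rate per unit energy and solid angle into expected counts. It integrates over each energy bin, then multiplies by the ON-region solid angle and the livetime. The NumPy-only sketch below does the same unit bookkeeping. It uses a hypothetical power-law background rate of 10 × E^-2.7 s-1 TeV-1 sr-1, chosen for illustration and not taken from the CTA IRF.

```python
import numpy as np


def bkg_rate(energy):
    """Hypothetical background rate in s-1 TeV-1 sr-1 (illustrative numbers only)."""
    return 10.0 * energy ** -2.7


edges = np.logspace(-1, 2, 31)                     # TeV
theta = np.deg2rad(0.1)
solid_angle = 2 * np.pi * (1 - np.cos(theta))      # sr
livetime = 3600.0                                  # s


def integrate_bin(e1, e2, n=101):
    """Trapezoidal integral of bkg_rate over [e1, e2] on a log grid, in s-1 sr-1."""
    e = np.logspace(np.log10(e1), np.log10(e2), n)
    y = bkg_rate(e)
    return np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(e))


rate_per_bin = np.array([integrate_bin(a, b) for a, b in zip(edges[:-1], edges[1:])])
# (s-1 sr-1) * sr * s = dimensionless expected counts per energy bin
bkg_counts = rate_per_bin * solid_angle * livetime
```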

[13]:

dataset = SpectrumDatasetOnOff(
    aeff=aeff,
    edisp=edisp,
    model=model_ref,
    livetime=livetime,
    acceptance=1,
    acceptance_off=5,
)
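With acceptance=1 and acceptance_off=5, the OFF region collects five times as much background as the ON region. OFF counts are therefore scaled by alpha = acceptance / acceptance_off = 0.2 before subtraction, giving excess = n_on - alpha × n_off. The toy Monte Carlo below uses made-up expected counts: 50 signal and 20 background counts in the ON region. It shows that, on average, this excess recovers the signal:

```python
import numpy as np

# ON/OFF exposure ratio: the OFF region sees 5x more background than the ON region
acceptance, acceptance_off = 1.0, 5.0
alpha = acceptance / acceptance_off       # 0.2

mu_signal = 50.0      # hypothetical expected source counts in the ON region
mu_bkg = 20.0         # hypothetical expected background counts in the ON region

rng = np.random.default_rng(0)
n_trials = 100_000
n_on = rng.poisson(mu_signal + mu_bkg, size=n_trials)
n_off = rng.poisson(mu_bkg / alpha, size=n_trials)

# Background-subtracted counts for each simulated observation
excess = n_on - alpha * n_off
```

Since E[n_on] - alpha × E[n_off] = (50 + 20) - 0.2 × 100 = 50, the mean excess matches the injected signal.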

[14]:

%%time
# Now simulate 100 independent spectra using the same set of observation conditions.
n_obs = 100
datasets = []
for idx in range(n_obs):
    # Each seed gives a different Poisson realisation of ON and OFF counts
    dataset.fake(random_state=idx, background_model=bkg)
    datasets.append(dataset.copy())

CPU times: user 184 ms, sys: 3.48 ms, total: 188 ms

Wall time: 188 ms
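The simulation loop calls dataset.copy() because each call to fake() replaces the counts stored on the same dataset object. Appending the object itself would leave a list of identical references to the last realisation. The hypothetical ToyDataset class below is not a gammapy class. It reproduces that behaviour in plain Python and NumPy, with a deep copy standing in for copy():

```python
import copy

import numpy as np


class ToyDataset:
    """Hypothetical stand-in for a dataset whose fake() overwrites counts in place."""

    def __init__(self, npred):
        self.npred = np.asarray(npred, dtype=float)
        self.counts = None

    def fake(self, random_state):
        # Seeded Poisson realisation of the expected counts
        rng = np.random.RandomState(random_state)
        self.counts = rng.poisson(self.npred)

    def copy(self):
        return copy.deepcopy(self)


dataset = ToyDataset(npred=[5.0, 10.0, 20.0])

aliased, copied = [], []
for seed in range(3):
    dataset.fake(random_state=seed)
    aliased.append(dataset)          # the same object is appended every time
    copied.append(dataset.copy())    # an independent snapshot of this realisation
```

All entries of aliased end up showing the counts of the last seed, while copied keeps one realisation per seed.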

Before moving on to the fit, let’s have a look at the simulated observations.

[15]:

n_on = [dataset.counts.data.sum() for dataset in datasets]
n_off = [dataset.counts_off.data.sum() for dataset in datasets]
excess = [dataset.excess.data.sum() for dataset in datasets]

fig, axes = plt.subplots(1, 3, figsize=(12, 4))
axes[0].hist(n_on)
axes[0].set_xlabel("n_on")
axes[1].hist(n_off)
axes[1].set_xlabel("n_off")
axes[2].hist(excess)
axes[2].set_xlabel("excess");

Now we fit each simulated spectrum individually:

[16]:

%%time
results = []
for dataset in datasets:
    # Start every fit from a fresh copy of the reference model
    dataset.model = model_ref.copy()
    fit = Fit([dataset])
    result = fit.optimize()
    results.append(
        {
            "index": result.parameters["index"].value,
            "amplitude": result.parameters["amplitude"].value,
        }
    )

CPU times: user 3.38 s, sys: 19.3 ms, total: 3.39 s

Wall time: 3.41 s
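Fit.optimize() maximises a likelihood for each dataset. The simplified sketch below fits only the spectral index of toy power-law counts by scanning the Cash statistic on a grid. It assumes a fixed normalisation K, and it approximates the counts in each bin as dN/dE at the bin centre times the bin width. Gammapy instead uses a numerical minimiser, and on-off datasets are fitted with a likelihood that also models the OFF counts (WStat). Treat this only as an illustration of the idea.

```python
import numpy as np

# Toy data: power-law counts with an assumed, fixed normalisation K
edges = np.logspace(-1, 2, 31)
e_center = np.sqrt(edges[:-1] * edges[1:])
width = np.diff(edges)
K = 1e4                                   # hypothetical counts per TeV at 1 TeV


def npred(index):
    """Expected counts per bin, approximated as K * E_center**-index * bin width."""
    return K * e_center ** -index * width


rng = np.random.default_rng(1)
counts = rng.poisson(npred(2.1))


def cash(mu, n):
    """Cash statistic C = 2 * sum(mu - n * ln(mu)), dropping terms independent of mu."""
    return 2 * np.sum(mu - n * np.log(mu))


# Profile the statistic over a grid of trial indices and take the minimum
grid = np.arange(1.5, 3.0, 0.001)
stat = np.array([cash(npred(g), counts) for g in grid])
best_index = grid[np.argmin(stat)]
```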

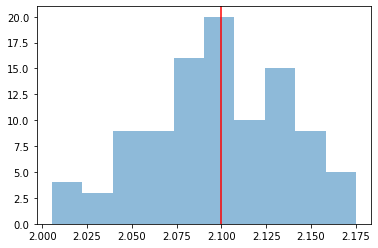

We take a look at the distribution of the fitted indices. It agrees well with the index of the injected spectrum, index=2.1.

[17]:

index = np.array([r["index"] for r in results])
plt.hist(index, bins=10, alpha=0.5)
plt.axvline(x=model_ref.parameters["index"].value, color="red")
print("spectral index: {:.2f} +/- {:.2f}".format(index.mean(), index.std()))

spectral index: 2.10 +/- 0.04

Adding a user defined model

The spectral models in gammapy are subclasses of SpectralModel. Calling SpectralModel.__subclasses__() lists its direct subclasses:

[18]:

SpectralModel.__subclasses__()

[18]:

[gammapy.modeling.models.spectral.ConstantSpectralModel,
 gammapy.modeling.models.spectral.CompoundSpectralModel,
 gammapy.modeling.models.spectral.PowerLawSpectralModel,
 gammapy.modeling.models.spectral.PowerLaw2SpectralModel,
 gammapy.modeling.models.spectral.ExpCutoffPowerLawSpectralModel,
 gammapy.modeling.models.spectral.ExpCutoffPowerLaw3FGLSpectralModel,
 gammapy.modeling.models.spectral.SuperExpCutoffPowerLaw3FGLSpectralModel,
 gammapy.modeling.models.spectral.SuperExpCutoffPowerLaw4FGLSpectralModel,
 gammapy.modeling.models.spectral.LogParabolaSpectralModel,
 gammapy.modeling.models.spectral.TemplateSpectralModel,
 gammapy.modeling.models.spectral.ScaleSpectralModel,
 gammapy.modeling.models.spectral.AbsorbedSpectralModel,
 gammapy.modeling.models.spectral.NaimaSpectralModel,
 gammapy.modeling.models.spectral.GaussianSpectralModel,
 gammapy.modeling.models.spectral.LogGaussianSpectralModel,
 gammapy.modeling.models.spectral_crab.MeyerCrabSpectralModel]

This section shows how to add a user-defined spectral model.

To do this, subclass SpectralModel. Every SpectralModel subclass needs an __init__ method, which sets up the Parameters of the model, and a static method called evaluate, which defines the mathematical expression of the model.
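The subclassing pattern can be illustrated without gammapy. A base class stores the parameter values, and calling the model forwards them as keyword arguments to the static evaluate method. ToySpectralModel and ToyPowerLaw are hypothetical classes written for this sketch and work with plain floats. Gammapy's SpectralModel adds units, Parameter objects and helpers such as plotting and integration on top of this idea.

```python
import numpy as np


class ToySpectralModel:
    """Hypothetical minimal base class illustrating the pattern; NOT gammapy's SpectralModel."""

    def __init__(self, parameters):
        # parameters: dict mapping parameter name -> current value
        self.parameters = dict(parameters)

    def __call__(self, energy):
        # Forward the current parameter values as keyword arguments to evaluate()
        return self.evaluate(energy, **self.parameters)


class ToyPowerLaw(ToySpectralModel):
    def __init__(self, index, amplitude, reference):
        super().__init__({"index": index, "amplitude": amplitude, "reference": reference})

    @staticmethod
    def evaluate(energy, index, amplitude, reference):
        # The mathematical expression lives in a static method of the subclass
        return amplitude * (np.asarray(energy) / reference) ** -index


model = ToyPowerLaw(index=2.0, amplitude=1e-12, reference=1.0)
```

Changing an entry in model.parameters changes what model(energy) returns, because evaluate always receives the current values.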

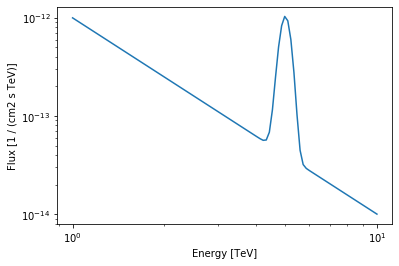

As an example, we will use a PowerLawSpectralModel plus a Gaussian (with fixed width).

[19]:

class UserModel(SpectralModel):
    def __init__(self, index, amplitude, reference, mean, width):
        super().__init__(
            [
                Parameter("index", index, min=0),
                Parameter("amplitude", amplitude, min=0),
                Parameter("reference", reference, frozen=True),
                Parameter("mean", mean, min=0),
                Parameter("width", width, min=0, frozen=True),
            ]
        )

    @staticmethod
    def evaluate(energy, index, amplitude, reference, mean, width):
        # Power-law component, reusing the built-in expression
        pwl = PowerLawSpectralModel.evaluate(
            energy=energy,
            index=index,
            amplitude=amplitude,
            reference=reference,
        )
        # Gaussian line at `mean`, sharing the power-law amplitude
        gauss = amplitude * np.exp(-((energy - mean) ** 2) / (2 * width ** 2))
        return pwl + gauss
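As a sanity check of the expression in evaluate, the same power law plus Gaussian can be written as a plain NumPy function. user_model is a hypothetical helper that mirrors UserModel.evaluate without units, with energies in TeV. Both terms share amplitude, so the line height at mean equals the power-law normalisation at reference. With the parameters used in the next cell, the flux between 1 and 10 TeV therefore peaks at 5 TeV.

```python
import numpy as np


def user_model(energy, index, amplitude, reference, mean, width):
    """NumPy-only version of UserModel.evaluate: power law plus Gaussian line."""
    pwl = amplitude * (energy / reference) ** -index
    gauss = amplitude * np.exp(-((energy - mean) ** 2) / (2 * width ** 2))
    return pwl + gauss


params = dict(index=2.0, amplitude=1e-12, reference=1.0, mean=5.0, width=0.2)
energy = np.linspace(1, 10, 901)          # TeV, step 0.01
flux = user_model(energy, **params)
peak_energy = energy[np.argmax(flux)]     # close to the line position at 5 TeV
```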

[20]:

model = UserModel(
    index=2,
    amplitude=1e-12 * u.Unit("cm-2 s-1 TeV-1"),
    reference=1 * u.TeV,
    mean=5 * u.TeV,
    width=0.2 * u.TeV,
)
print(model)

UserModel

Parameters:

name      value     error unit           min       max frozen
--------- --------- ----- -------------- --------- --- ------
index     2.000e+00 nan                  0.000e+00 nan False
amplitude 1.000e-12 nan   cm-2 s-1 TeV-1 0.000e+00 nan False
reference 1.000e+00 nan   TeV            nan       nan True
mean      5.000e+00 nan   TeV            0.000e+00 nan False
width     2.000e-01 nan   TeV            0.000e+00 nan True

[21]:

energy_range = [1, 10] * u.TeV
model.plot(energy_range=energy_range);

Exercises

- Change the observation time to something longer or shorter. Do the simulated counts and the spectral fit results change as you expected?

- Change the spectral model, e.g. add a cutoff at 5 TeV, or simulate a steep-spectrum source with a spectral index of 4.0.

- Simulate spectra with the spectral model we just defined. How much observation time do you need to recover the injected parameters?

What next?

In this tutorial we simulated and analysed the spectrum of a source using CTA IRFs from the first data challenge (1DC).

If you’d like to go further, please see the other tutorial notebooks.